A rapid, effective, and efficient response to events that may compromise human health or the environment is now a primary requirement. The scientific and cultural convergence between e-sciences (that is, sciences that treat computational simulation as an instrument on a par with laboratory analysis and field measurement) and tools based on Artificial Intelligence (AI) techniques is leading to what could be called “ai-science”.

In this scenario, high-performance, parallel, and distributed computing consolidates its already solid role as an enabling technology, without which rapid responses to intense phenomena, extreme environments, and what-if scenarios would not be feasible. The orchestration of computational resources is fundamental to high-performance distributed computing and its applications. Its strategic importance stems from the need to balance performance requirements, the availability of computation and storage, and management costs.

Scientific workflows currently in use

Scientific workflow engines for processing large amounts of data are the main orchestration tools; their architectural stability rests on decades of innovation and evolution. Pegasus (National Science Foundation) defines workflows abstractly, leaving out the details of the underlying execution environment and the low-level specifications required by the middleware.

StreamFlow (University of Turin) is a workflow engine based on the Common Workflow Language (CWL) standard that natively uses containerization and supports task executors that do not share a file system.

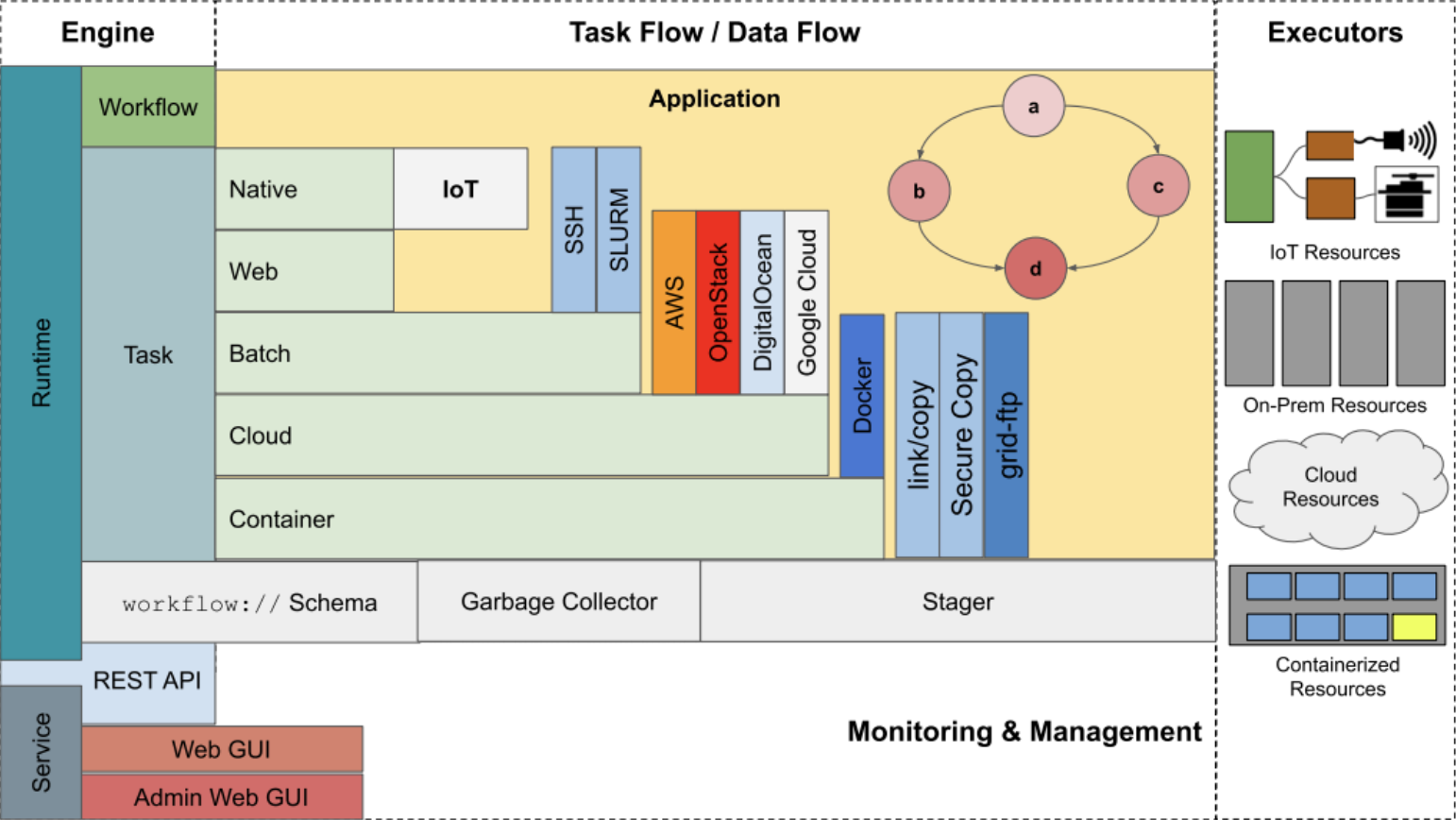

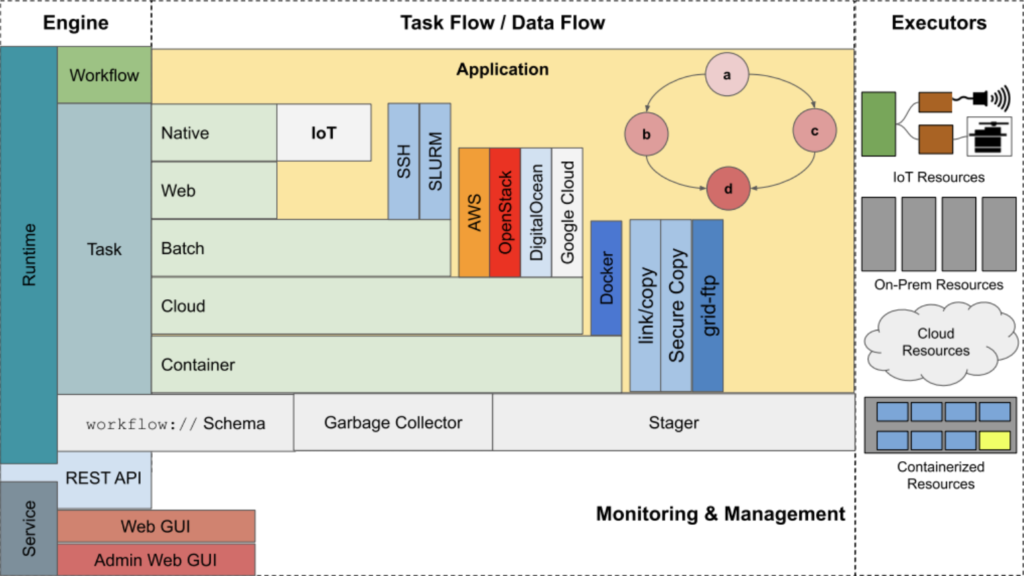

DagOnStar (University of Naples “Parthenope”) is a data-driven workflow engine based on the workflow:// scheme, capable of executing tasks on any combination of on-premises machines, on-premises high-performance computing clusters, containers, and cloud-based virtual resources. It is currently used to orchestrate computational resources for meteo@uniparthenope.
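As an illustration of what "data-driven" can mean in practice, the sketch below infers task dependencies from workflow:// references embedded in task commands and derives a valid execution order. It uses only the Python standard library and is not DagOnStar's implementation: the reference form `workflow:///<task>/<path>`, the task names, and the helper functions `infer_dependencies` and `execution_order` are assumptions made for this example.

```python
import re
from graphlib import TopologicalSorter  # Python 3.9+

# Assumed reference form (hypothetical): workflow:///<producer-task>/<path>
REFERENCE = re.compile(r"workflow:///([A-Za-z0-9_.-]+)/")


def infer_dependencies(commands):
    """Map each task name to the set of tasks whose output it references."""
    dependencies = {}
    for name, command in commands.items():
        producers = set(REFERENCE.findall(command))
        unknown = producers - commands.keys()
        if unknown:
            raise ValueError(f"task {name!r} references unknown tasks: {sorted(unknown)}")
        dependencies[name] = producers - {name}  # ignore self-references
    return dependencies


def execution_order(commands):
    """Return one order in which every producer runs before its consumers."""
    return list(TopologicalSorter(infer_dependencies(commands)).static_order())


if __name__ == "__main__":
    # Hypothetical three-task workflow: no dependency is declared explicitly.
    commands = {
        "A": "mkdir output; hostname > output/f1.txt",
        "B": "cat workflow:///A/output/f1.txt > f2.txt",
        "C": "cat workflow:///A/output/f1.txt workflow:///B/f2.txt > f3.txt",
    }
    print(infer_dependencies(commands))
    print(execution_order(commands))
```

The design point is that the user only names the data a task reads; the edges of the graph follow from those names rather than from a separate dependency declaration.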

Downsides of current workflows

Most of today’s available workflow engines suffer from two bottlenecks:

1) the DAG that formally represents a workflow allows a task to start only when all the data producers it depends on have completed their execution and their data is entirely available;

2) the technology used to transfer data from data-producer to data-consumer tasks is often based on shared file systems or on data transfer through dedicated protocols. Ad hoc file systems, which are instantiated by the user as a computational task and allow the federated use of RAM as storage, can mitigate this second bottleneck. Nevertheless, the temporal dependence that ties the start of a data-consumer task to the full completion of its data-producer task remains unresolved.
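The cost of the first bottleneck can be made concrete with an idealized timing model, sketched below. It compares a consumer that waits for the producer to finish with one that processes each chunk as soon as it is available. The chunk counts and per-chunk times are hypothetical, and the model deliberately ignores transfer and synchronization overheads, so it gives an upper bound on the benefit rather than a measurement.

```python
def barrier_makespan(n_chunks, produce, consume):
    """Consumer starts only after the producer has written every chunk."""
    return n_chunks * produce + n_chunks * consume


def streamed_makespan(n_chunks, produce, consume):
    """Consumer handles chunk i as soon as it exists and chunk i-1 is done."""
    finish = 0.0
    for i in range(1, n_chunks + 1):
        available = i * produce  # time at which chunk i has been produced
        finish = max(finish, available) + consume
    return finish


if __name__ == "__main__":
    # Hypothetical workload: 10 chunks, 2 s to produce and 1 s to consume each.
    print(barrier_makespan(10, 2.0, 1.0))   # 30.0
    print(streamed_makespan(10, 2.0, 1.0))  # 21.0
```

In this model the streamed makespan equals `produce + (n_chunks - 1) * max(produce, consume) + consume`, so the slower of the two stages dominates and the faster one is hidden behind it.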

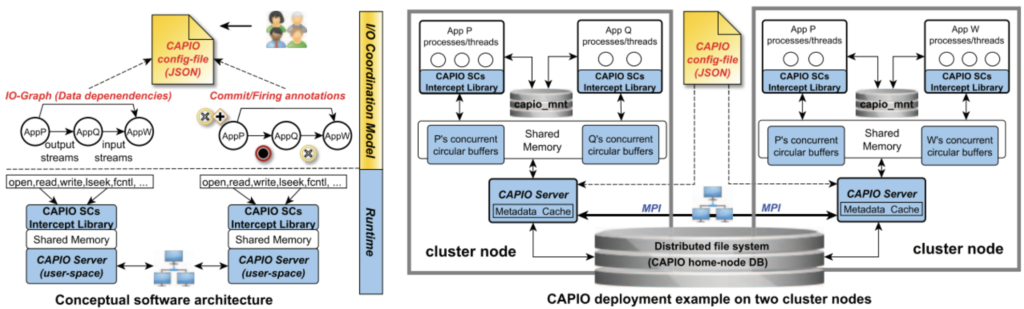

In this scenario, CAPIO (Cross-Application Programmable I/O, developed at the University of Pisa) is a promising solution to the problem described above.

Introducing CAPIO

CAPIO is a middleware that injects streaming I/O functionality into workflows whose tasks communicate through files, improving the overlap between computation and I/O without modifying the application code. CAPIO implements what is de facto an ad hoc file system that makes file-based communication between tasks more effective and efficient.

Another feature of CAPIO is that its ad hoc file system is completely ephemeral: everything stored in it is guaranteed to be deleted at the end of the execution.
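The idea of streaming over file-based communication can be illustrated with a small standard-library sketch in which a consumer reads a file while the producer is still appending to it. This is not CAPIO's API or mechanism: CAPIO works transparently, without changes to the application code, whereas here the polling loop, the `done` event used as an end-of-stream signal, and all function names are assumptions made for the example. The temporary directory only loosely mirrors the ephemeral storage described above.

```python
import os
import tempfile
import threading
import time


def producer(path, n_records, done):
    """Append records one at a time, flushing so a reader can see them early."""
    with open(path, "ab") as f:
        for i in range(n_records):
            f.write(f"record-{i}\n".encode("utf-8"))
            f.flush()
            time.sleep(0.01)  # stand-in for per-record computation
    done.set()  # hypothetical end-of-stream signal


def consumer(path, done, poll=0.005):
    """Read complete records while the file is still growing."""
    records, overlapped, pending = [], False, b""
    with open(path, "rb") as f:
        while True:
            finished = done.is_set()  # sampled before reading, so no write is missed
            chunk = f.read()
            if chunk:
                pending += chunk
                *complete, pending = pending.split(b"\n")  # keep any partial record
                if complete and not finished:
                    overlapped = True  # data consumed before the producer ended
                records.extend(line.decode("utf-8") for line in complete)
            elif finished:
                break
            else:
                time.sleep(poll)
    return records, overlapped


def run_demo(n_records=20):
    """Run producer and consumer concurrently on a file in a temporary directory."""
    with tempfile.TemporaryDirectory() as tmp:  # removed when the block exits
        path = os.path.join(tmp, "stream.txt")
        open(path, "wb").close()  # create the file before the consumer opens it
        done = threading.Event()
        worker = threading.Thread(target=producer, args=(path, n_records, done))
        worker.start()
        records, overlapped = consumer(path, done)
        worker.join()
    return records, overlapped


if __name__ == "__main__":
    records, overlapped = run_demo()
    print(len(records), "records read; overlap observed:", overlapped)
```

Whether overlap is observed in a given run depends on thread scheduling, which is why the sketch reports it instead of assuming it.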

Innovation of DAGonCAPIO

This project combines CAPIO with a data-flow-based workflow engine, in which the flow is implicitly determined by the logical producer/consumer dependencies among tasks. The scientific background of the lead research organization makes it possible to frame the real application as a use case for an integrated system that couples fluid dynamics modeling and AI, usable in the following scenarios:

1) on-demand: simulation of the transport and dispersion of passive tracers that behave like pollutants, for a timely response to accidental or deliberate spills of substances into the sea (for example, an accident involving ships carrying crude oil or petroleum products), by forecasting trajectories and stranding locations;

2) operational: an application running routinely that predicts the transport and diffusion of passive tracers that behave like pollutants released periodically, for example from sewage systems, and the resulting possible contamination of aquaculture systems.

References

- Deelman, Ewa, Karan Vahi, Mats Rynge, Rajiv Mayani, Rafael Ferreira da Silva, George Papadimitriou, and Miron Livny. “The evolution of the Pegasus workflow management software.” Computing in Science & Engineering 21, no. 4 (2019): 22-36.

- Colonnelli, Iacopo, Barbara Cantalupo, Ivan Merelli, and Marco Aldinucci. “StreamFlow: cross-breeding cloud with HPC.” IEEE Transactions on Emerging Topics in Computing 9, no. 4 (2020): 1723-1737.

- Sánchez-Gallegos, Dante Domizzi, Diana Di Luccio, Sokol Kosta, J. L. Gonzalez-Compean, and Raffaele Montella. “An efficient pattern-based approach for workflow supporting large-scale science: The DagOnStar experience.” Future Generation Computer Systems 122 (2021): 187-203.

- Martinelli, Alberto Riccardo, Massimo Torquati, Marco Aldinucci, Iacopo Colonnelli, and Barbara Cantalupo. “CAPIO: a Middleware for Transparent I/O Streaming in Data-Intensive Workflows.” In 2023 IEEE 30th International Conference on High-Performance Computing, Data, and Analytics (HiPC), pp. 153-163. IEEE, 2023.